Recently I decided to build a NAS to store my RAW photos and video content, and to serve as a backup target for my MacBook. I wanted to be able to edit directly from the NAS with low latency over 10 Gbit Ethernet, so spinning disks were out.

However, building an all-SSD solution turned out to be surprisingly tricky: the old SATA standard is much too slow for modern SSDs, but getting more than a few PCIe NVMe slots on a consumer mainboard is quite difficult (I wanted to avoid server boards to minimize power consumption). On top of that, SATA SSDs tend to be more expensive as well!

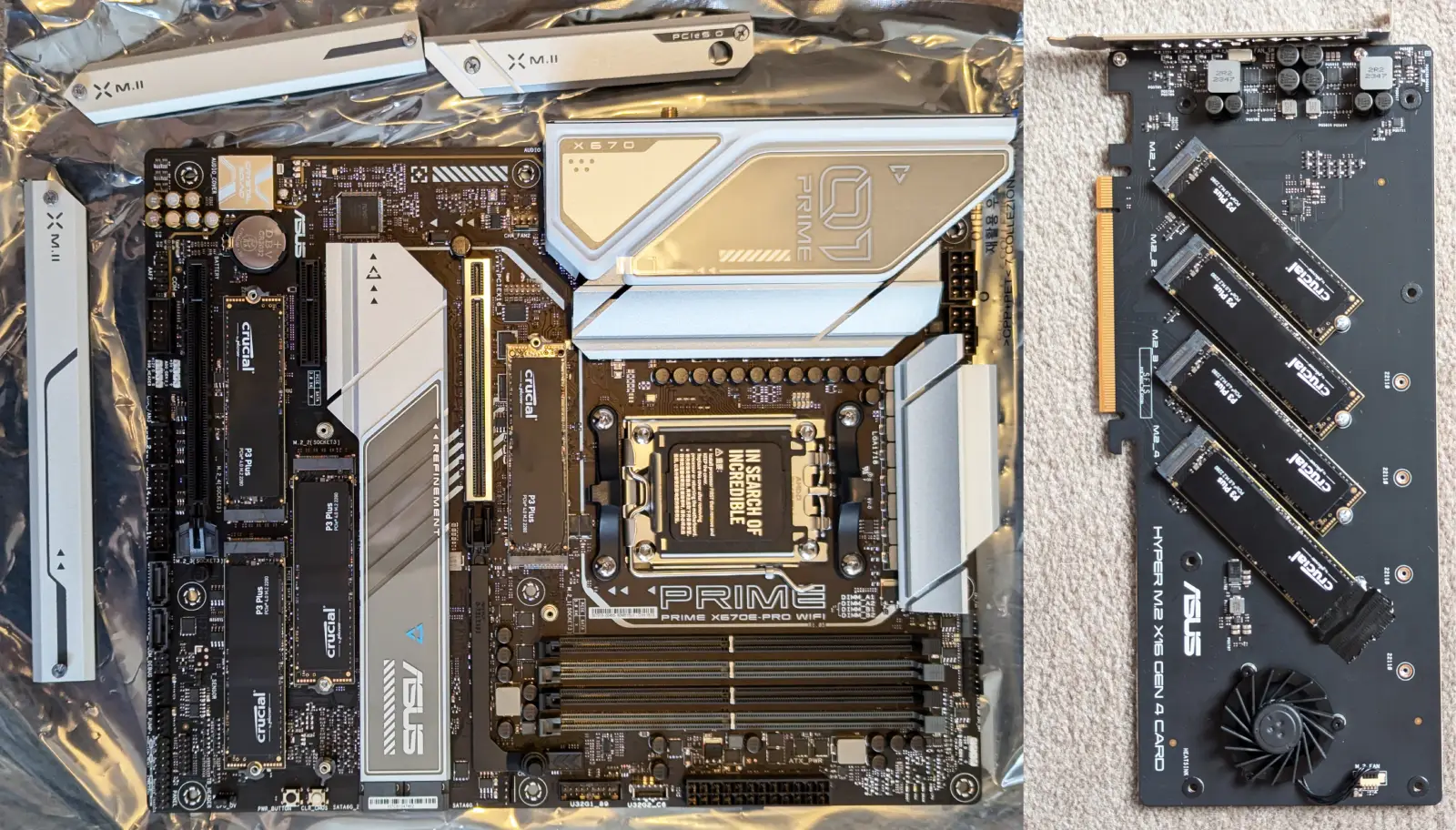

In the end I settled on an Asus Prime X670E-PRO mainboard with 4 NVMe slots, combined with an Asus Hyper M.2 X16 card for a further 4 slots, giving me 8 NVMe slots in total: enough to make RAIDZ2 worthwhile. I filled the slots with eight Crucial P3 Plus NVMe SSDs, chosen for being the cheapest high-quality NVMe SSDs at the time of my purchase.

I used fio to confirm the maximum bandwidth to each device, using the example commands from the Google Cloud documentation:

```shell
# Writes directly to each raw block device: any existing data on it is destroyed.
for i in $(seq 0 7); do
  sudo fio --name=write_bandwidth_test \
    --filename=/dev/nvme${i}n1 --filesize=4000G \
    --time_based --ramp_time=2s --runtime=1m \
    --ioengine=libaio --direct=1 --verify=0 --randrepeat=0 \
    --numjobs=4 --thread --offset_increment=500G \
    --bs=1M --iodepth=64 --rw=write \
    --iodepth_batch_submit=64 --iodepth_batch_complete_max=64
done
```

As expected, all SSDs reached around 4 GiB/s, except for the one in the motherboard's PCIe 3.0 x4 slot, which topped out at around 2.7 GiB/s.
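The slot difference can be sanity-checked against theoretical link rates. This is my own back-of-the-envelope sketch, not part of the original benchmark: it assumes 8 GT/s per lane for PCIe 3.0 and 16 GT/s for PCIe 4.0 (both with 128b/130b encoding), 6 Gbit/s with 8b/10b encoding for SATA III, and ignores protocol overhead entirely.

```shell
#!/usr/bin/env bash
# Theoretical line rate of a serial link, ignoring protocol overhead
# (packet headers, flow control), so real throughput is always lower.
# Assumed rates: PCIe 3.0 = 8 GT/s, PCIe 4.0 = 16 GT/s, SATA III = 6 GT/s.

# link_gib_per_s GT_PER_LANE LANES PAYLOAD_BITS ENCODED_BITS
#   GT_PER_LANE: gigatransfers per second per lane (1 bit per transfer)
#   PAYLOAD_BITS/ENCODED_BITS: line encoding, e.g. 128/130 or 8/10
link_gib_per_s() {
  awk -v gt="$1" -v lanes="$2" -v payload="$3" -v encoded="$4" 'BEGIN {
    bytes_per_s = gt * 1e9 * lanes * payload / encoded / 8
    printf "%.2f\n", bytes_per_s / 2^30
  }'
}

echo "PCIe 3.0 x4: $(link_gib_per_s 8 4 128 130) GiB/s"
echo "PCIe 4.0 x4: $(link_gib_per_s 16 4 128 130) GiB/s"
echo "SATA III:    $(link_gib_per_s 6 1 8 10) GiB/s"
```

A PCIe 4.0 x4 link (about 7.34 GiB/s) leaves plenty of headroom above the drives' ~4 GiB/s, while PCIe 3.0 x4 (about 3.67 GiB/s) caps below it even in theory. The measured 2.7 GiB/s is lower still, so the link ceiling is an upper bound rather than a full explanation. SATA III, at roughly 0.56 GiB/s, is an order of magnitude below either, which is why it was ruled out earlier.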

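I also benchmarked writing to all eight SSDs simultaneously, rerunning the command with all devices passed as one colon-separated `--filename` list:

```shell
--filename=/dev/nvme1n1:/dev/nvme2n1:/dev/nvme3n1:/dev/nvme4n1:/dev/nvme5n1:/dev/nvme6n1:/dev/nvme7n1:/dev/nvme0n1
```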

This hovered around 23.5 GiB/s, about three quarters of the roughly 30 GiB/s expected from the per-disk results (7 × 4 GiB/s + 2.7 GiB/s), but still more than enough for my purposes.

ZFS - RAIDZ2

With the basics out of the way, it was time to test read and write performance through ZFS. For simplicity, I started with the default ZFS configuration suggested by TrueNAS SCALE.

To make my NAS more resilient against common failures, I wanted to use ZFS with RAIDZ2. RAIDZ2 stripes data together with two parity blocks across all disks in the pool, so the pool survives the partial or complete failure of up to two disks without any data loss, at the cost of some storage capacity. With eight SSDs of 3.64 TiB each, I ended up with 20.57 TiB of usable capacity, roughly 70% of the raw space: a cheap price to pay for so much more robustness!
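Where the capacity goes can be estimated with a small calculation. This is a simplified sketch that only models the parity columns; it deliberately leaves out ZFS's own allocation and metadata overhead.

```shell
#!/usr/bin/env bash
# Ideal RAIDZ capacity: each stripe spends PARITY of its DISKS columns on
# parity, so at best (DISKS - PARITY) / DISKS of the raw space holds data.
# Simplification: ZFS overhead (padding, metadata, reserved space) is ignored.

# raidz_ideal DISKS PARITY DISK_TIB
raidz_ideal() {
  awk -v n="$1" -v p="$2" -v size="$3" 'BEGIN {
    raw = n * size
    usable = (n - p) * size
    printf "raw=%.2f usable=%.2f ratio=%.1f%%\n", raw, usable, 100 * usable / raw
  }'
}

raidz_ideal 8 2 3.64   # eight 3.64 TiB SSDs in RAIDZ2
```

The ideal is 21.84 of 29.12 TiB (75%). The 20.57 TiB that TrueNAS reports is about 70.6% of raw; the gap to 75% is presumably ZFS's own overhead, which this sketch does not attempt to model.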

Initial performance exceeded my expectations. I'm sure it can be optimized further, but it's already more than fast enough for network shares:

```shell
TEST_DIR=/mnt/data/benchmark
```

Sequential Write

```shell
sudo fio --name=write_throughput --directory=$TEST_DIR --numjobs=16 \
  --size=10G --time_based --runtime=1m --ramp_time=2s --ioengine=libaio \
  --direct=1 --verify=0 --bs=1M --iodepth=64 --rw=write \
  --group_reporting=1 --iodepth_batch_submit=64 \
  --iodepth_batch_complete_max=64
```

IOPS=5491, BW=5495MiB/s (5762MB/s)

Write throughput seems entirely CPU-bound: all cores are fully utilized during this benchmark. Disabling the default LZ4 compression and re-testing made no difference.

Random Write

```shell
sudo fio --name=write_iops --directory=$TEST_DIR --size=10G \
  --time_based --runtime=1m --ramp_time=2s --ioengine=libaio --direct=1 \
  --verify=0 --bs=4K --iodepth=256 --rw=randwrite --group_reporting=1 \
  --iodepth_batch_submit=256 --iodepth_batch_complete_max=256
```

IOPS=44.1k, BW=172MiB/s (181MB/s)

In RAIDZ, random IOPS are roughly limited to those of the single slowest drive in the vdev, because the chunks of each record (plus parity) are spread across, and must be written to, all drives.
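To illustrate, here is a rough sketch of how a single ZFS block is laid out on a RAIDZ vdev, following the allocation rounding in OpenZFS's `vdev_raidz_asize()`. It assumes ashift=12 (4 KiB sectors) and the 128 KiB default recordsize; I haven't checked either against this particular pool, so treat both as assumptions.

```shell
#!/usr/bin/env bash
# Sectors allocated for one ZFS block on a RAIDZ vdev, after the rounding
# in OpenZFS's vdev_raidz_asize():
#   data    = ceil(block / sector)
#   written = data + PARITY * ceil(data / (DISKS - PARITY))
#   alloc   = written rounded up to a multiple of (PARITY + 1)

# raidz_sectors BLOCK_BYTES ASHIFT DISKS PARITY
raidz_sectors() {
  local block=$1 ashift=$2 disks=$3 parity=$4
  local sector=$(( 1 << ashift ))
  # Data sectors needed to hold the block.
  local data=$(( (block + sector - 1) / sector ))
  # Stripe rows: each holds (disks - parity) data sectors plus parity.
  local rows=$(( (data + disks - parity - 1) / (disks - parity) ))
  local written=$(( data + parity * rows ))
  # One column per disk, so at most DISKS disks are involved.
  local touched=$(( written < disks ? written : disks ))
  # Allocation is padded to a multiple of (parity + 1) sectors.
  local unit=$(( parity + 1 ))
  local alloc=$(( (written + unit - 1) / unit * unit ))
  echo "data=$data allocated=$alloc disks_touched=$touched"
}

# Assumed: ashift=12, 8-wide RAIDZ2.
raidz_sectors $(( 128 * 1024 )) 12 8 2   # one full 128 KiB record
raidz_sectors 4096 12 8 2                # one isolated 4 KiB block
```

A full 128 KiB record spans all eight disks. An isolated 4 KiB block would only touch three, but a 4K random write into an existing 128 KiB record generally makes ZFS rewrite the whole record, so the full-record case is the relevant one for this benchmark.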

Sequential Read

```shell
sudo fio --name=read_throughput --directory=$TEST_DIR --numjobs=16 \
  --size=10G --time_based --runtime=1m --ramp_time=2s --ioengine=libaio \
  --direct=1 --verify=0 --bs=1M --iodepth=64 --rw=read \
  --group_reporting=1 \
  --iodepth_batch_submit=64 --iodepth_batch_complete_max=64
```

IOPS=6635, BW=6653MiB/s (6976MB/s)

Random Read

```shell
sudo fio --name=read_iops --directory=$TEST_DIR --size=10G \
  --time_based --runtime=1m --ramp_time=2s --ioengine=libaio --direct=1 \
  --verify=0 --bs=4K --iodepth=256 --rw=randread --group_reporting=1 \
  --iodepth_batch_submit=256 --iodepth_batch_complete_max=256
```

IOPS=99.7k, BW=389MiB/s (408MB/s)
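To put the sequential numbers in context of the 10 Gbit Ethernet link mentioned at the start, here is a quick headroom calculation. It is a sketch that only uses the raw line rate and the fio results above; Ethernet, TCP and file-sharing protocol overhead are ignored, so the real network ceiling is somewhat lower.

```shell
#!/usr/bin/env bash
# Compare pool throughput against a network link's raw line rate.
# Simplification: all protocol overhead is ignored.

# headroom POOL_MIB_PER_S LINK_GBIT_PER_S
headroom() {
  awk -v pool="$1" -v gbit="$2" 'BEGIN {
    link_mib = gbit * 1e9 / 8 / 2^20   # line rate in MiB/s
    printf "link=%.0f MiB/s headroom=%.1fx\n", link_mib, pool / link_mib
  }'
}

headroom 5495 10   # sequential write result
headroom 6653 10   # sequential read result
```

Sequential writes and reads exceed the roughly 1192 MiB/s line rate by about 4.6x and 5.6x respectively; only the 4K random results fall below it.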

Part List

If you want to replicate my build, the parts I used:

- AMD Ryzen 7 9700X

- Thermalright PS120SE

- Asus Prime X670E-PRO

- 2x Kingston Server Premier 16GB DDR5 (KSM56E46BS8KM-16HA)

- Asus Hyper M.2 X16 card

- 8x Crucial P3 Plus 4 TB (storage)

- 2x Crucial MX500 2 TB (boot drive)

- Cooler Master MWE 550 Gold

- Fractal Design North

Amazon links are to disambiguate models; any affiliate proceeds will go to charity.