AlphaDev (blog)

Faster sorting algorithms discovered using deep reinforcement learning

Fundamental algorithms such as sorting or hashing are used trillions of times on any given day. As demand for computation grows, it has become critical for these algorithms to be as performant as possible. Whereas remarkable progress has been achieved in the past, making further improvements on the efficiency of these routines has proved challenging for both human scientists and computational approaches. Here we show how artificial intelligence can go beyond the current state of the art by discovering hitherto unknown routines. To realize this, we formulated the task of finding a better sorting routine as a single-player game. We then trained a new deep reinforcement learning agent, AlphaDev, to play this game. AlphaDev discovered small sorting algorithms from scratch that outperformed previously known human benchmarks. These algorithms have been integrated into the LLVM standard C++ sort library. This change to this part of the sort library represents the replacement of a component with an algorithm that has been automatically discovered using reinforcement learning. We also present results in extra domains, showcasing the generality of the approach.

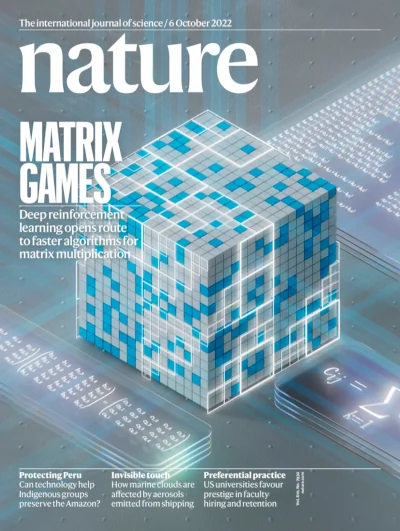

AlphaTensor (blog)

Discovering Matrix Multiplication Algorithms with AlphaTensor

Improving the efficiency of algorithms for fundamental computations can have a widespread impact, as it can affect the overall speed of a large amount of computations. Matrix multiplication is one such primitive task, occurring in many systems—from neural networks to scientific computing routines. We introduce a deep reinforcement learning approach based on AlphaZero for discovering efficient and provably correct algorithms for the multiplication of arbitrary matrices. Our agent, AlphaTensor, is trained to play a single-player game where the objective is finding tensor decompositions within a finite factor space. AlphaTensor discovered algorithms that outperform the state-of-the-art complexity for many matrix sizes. Particularly relevant is the case of 4x4 matrices in a finite field, where AlphaTensor’s algorithm improves on Strassen’s two-level algorithm for the first time, to our knowledge, since its discovery 50 years ago.

Gumbel MuZero

Policy improvement by planning with Gumbel

AlphaZero is a powerful RL algorithm, but it can fail to improve its policy network when not visiting all actions at the root of a search tree. To address this issue, we propose a policy improvement algorithm based on sampling actions without replacement. Furthermore, we use the idea of policy improvement to replace the more heuristic mechanisms by which AlphaZero selects and uses actions. Our new algorithm matches the state of the art on Go, chess, and Atari, and significantly improves performance when planning with few simulations.

Stochastic MuZero (pseudocode, blog)

Planning in Stochastic Environments with a Learned Model

In this paper we extend MuZero to learn and plan with stochastic models. We introduce a new algorithm, Stochastic MuZero, that learns a stochastic model incorporating afterstates, and uses this model to perform a stochastic tree search. Stochastic MuZero matched or exceeded the state of the art in canonical single and multi-agent environments, including 2048 and backgammon, while maintaining the same performance as standard MuZero in Go.

MuZero Rate Control (blog)

MuZero with Self-competition for Rate Control in VP9 Video Compression

We apply MuZero to video compression at YouTube, learning to select quantization parameters for the VP9 codec. We introduce a self-competition based reward mechanism to solve constrained RL with variable constraint satisfaction difficulty, achieveing an average 6.28% size reduction for a given video quality level.

AlphaCode (pdf, blog)

Competition-Level Code Generation with AlphaCode

We introduce a system for code generation that can create novel solutions to challenging competitive programming problems which require deep reasoning. Evaluated on recent Codeforces competitions, AlphaCode achieved an average ranking of top 54.3%. Three key components were key: (1) an extensive and clean competitive programming dataset for training and evaluation, (2) large and efficient-to-sample transformer-based architectures, and (3) large-scale model sampling to explore the search space, followed by filtering based on program behavior to a small set of submissions.

MuZero Unplugged (pseudocode, blog)

Online and Offline Reinforcement Learning by Planning with a Learned Model

We describe the Reanalyse algorithm, which uses model-based policy and value improvement operators to compute improved training targets for existing data points, allowing for efficient learning at data budgets varying by several orders of magnitude. We further show that Reanalyse can also be used to learn completely without environment interactions, as in the case of Offline Reinforcement Learning (Offline RL).

Sampled MuZero (blog)

Learning and Planning in Complex Action Spaces

We propose a general framework to reason in a principled way about policy evaluation and improvement over sets of sampled actions. Building on it, we propose Sampled MuZero, an extension of the MuZero algorithm that is able to learn in domains with arbitrarily complex action spaces by planning over sampled actions. We demonstrate this approach on the classical board game of Go and on two continuous control benchmark domains: DeepMind Control Suite and Real-World RL Suite.

MuZero (pdf, pseudocode, blog)

Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model

Constructing agents with planning capabilities has long been one of the main challenges in the pursuit of artificial intelligence. Tree-based planning methods have enjoyed huge success in challenging domains, such as chess and Go, where a perfect simulator is available. However, in real-world problems, the dynamics governing the environment are often complex and unknown. Here we present the MuZero algorithm, which, by combining a tree-based search with a learned model, achieves superhuman performance in a range of challenging and visually complex domains, without any knowledge of their underlying dynamics. The MuZero algorithm learns an iterable model that produces predictions relevant to planning: the action-selection policy, the value function and the reward. When evaluated on 57 different Atari games - the canonical video game environment for testing artificial intelligence techniques, in which model-based planning approaches have historically struggled - the MuZero algorithm achieved state-of-the-art performance. When evaluated on Go, chess and shogi - canonical environments for high-performance planning - the MuZero algorithm matched, without any knowledge of the game dynamics, the superhuman performance of the AlphaZero algorithm that was supplied with the rules of the game.

Constructing agents with planning capabilities has long been one of the main challenges in the pursuit of artificial intelligence. Tree-based planning methods have enjoyed huge success in challenging domains, such as chess and Go, where a perfect simulator is available. However, in real-world problems, the dynamics governing the environment are often complex and unknown. Here we present the MuZero algorithm, which, by combining a tree-based search with a learned model, achieves superhuman performance in a range of challenging and visually complex domains, without any knowledge of their underlying dynamics. The MuZero algorithm learns an iterable model that produces predictions relevant to planning: the action-selection policy, the value function and the reward. When evaluated on 57 different Atari games - the canonical video game environment for testing artificial intelligence techniques, in which model-based planning approaches have historically struggled - the MuZero algorithm achieved state-of-the-art performance. When evaluated on Go, chess and shogi - canonical environments for high-performance planning - the MuZero algorithm matched, without any knowledge of the game dynamics, the superhuman performance of the AlphaZero algorithm that was supplied with the rules of the game.

AlphaZero (pdf)

A general reinforcement learning algorithm that masters chess, shogi and Go through self-play

The game of chess is the longest-studied domain in the history of artificial intelligence. The strongest programs are based on a combination of sophisticated search techniques, domain-specific adaptations, and handcrafted evaluation functions that have been refined by human experts over several decades. By contrast, the AlphaGo Zero program recently achieved superhuman performance in the game of Go by reinforcement learning from self-play. In this paper, we generalize this approach into a single AlphaZero algorithm that can achieve superhuman performance in many challenging games. Starting from random play and given no domain knowledge except the game rules, AlphaZero convincingly defeated a world champion program in the games of chess and shogi (Japanese chess) as well as Go.

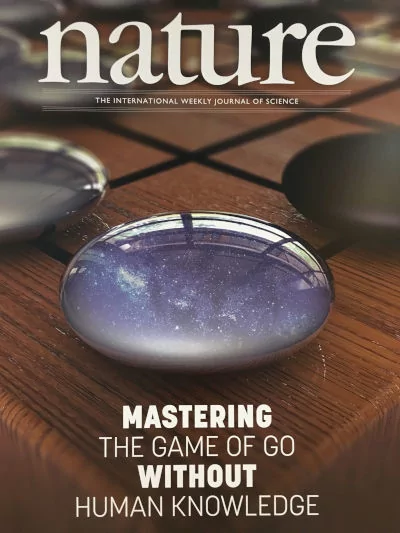

AlphaGo Zero (pdf)

Mastering the game of Go without human knowledge

A long-standing goal of artificial intelligence is an algorithm that learns, tabula rasa, superhuman proficiency in challenging domains. Recently, AlphaGo became the first program to defeat a world champion in the game of Go. The tree search in AlphaGo evaluated positions and selected moves using deep neural networks. These neural networks were trained by supervised learning from human expert moves, and by reinforcement learning from self-play. Here we introduce an algorithm based solely on reinforcement learning, without human data, guidance or domain knowledge beyond game rules. AlphaGo becomes its own teacher: a neural network is trained to predict AlphaGo’s own move selections and also the winner of AlphaGo’s games. This neural network improves the strength of the tree search, resulting in higher quality move selection and stronger self-play in the next iteration. Starting tabula rasa, our new program AlphaGo Zero achieved superhuman performance, winning 100–0 against the previously published, champion-defeating AlphaGo.

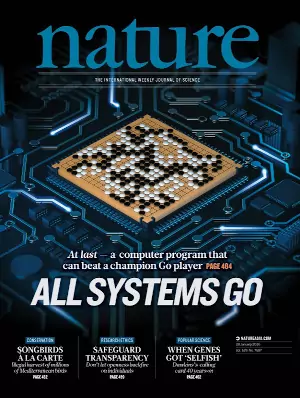

AlphaGo (pdf)

Mastering the game of Go with deep neural networks and tree search

The game of Go has long been viewed as the most challenging of classic games for artificial intelligence due to its enormous search space and the difficulty of evaluating board positions and moves. We introduce a new approach to computer Go that uses value networks to evaluate board positions and policy networks to select moves. These deep neural networks are trained by a novel combination of supervised learning from human expert games, and reinforcement learning from games of self-play. Without any lookahead search, the neural networks play Go at the level of state-of-the-art Monte-Carlo tree search programs that simulate thousands of random games of self-play. We also introduce a new search algorithm that combines Monte-Carlo simulation with value and policy networks. Using this search algorithm, our program AlphaGo achieved a 99% winning rate against the strongest Go programs, and defeated the human European champion by 5 games to 0. This is the first time that a computer program has defeated a human professional player in the full-sized game of Go, a feat previously believed to be at least a decade away.